
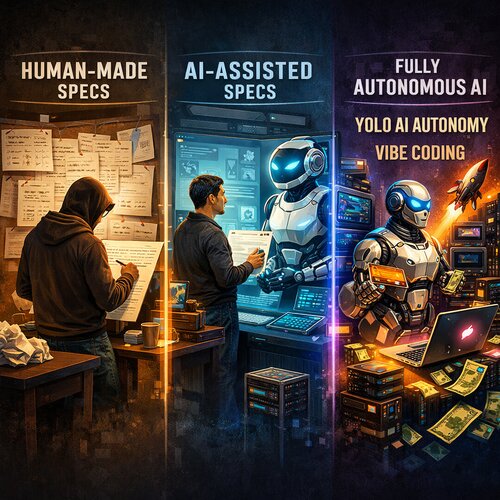

Harness: Who Really Designs Your Software — You or the AI?

So, who should control how a system is designed? The more I use AI, the more I want to delegate that task to it. Here’s what I’ve done so far, building on the Ralph Wiggum workflow: the workflows I’ve worked with, and the struggles I’ve had with each. With every new round of exploring concepts and strategies, the core goal is always to go faster while still getting quality out of the work.

Human-Made Specs

The first approach is simply to hand a huge spec that you wrote to an AI and have it generate the application right off the bat. Not in one shot, but through orchestration and scaffolding that splits the problem into topics of concern and builds an implementation plan from a guided resource file listing the packages you expect the application to use. The whole process is autonomous and non-interactive; the base specification document is the only interactive part.
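To make that scaffolding step concrete, here’s a minimal sketch. It assumes the spec is a markdown file with one `## ` heading per topic of concern, and that the guided resource file has already been loaded into a dict mapping topic names to expected packages. `PlanItem`, `split_topics`, and `build_plan` are hypothetical names for illustration, not part of any particular harness.

```python
from dataclasses import dataclass, field


@dataclass
class PlanItem:
    topic: str  # one topic of concern, taken from a spec heading
    body: str   # the spec text under that heading
    packages: list[str] = field(default_factory=list)  # packages the human expects for this topic


def split_topics(spec: str) -> dict[str, str]:
    """Split a markdown spec into topics, one per '## ' heading (assumed convention)."""
    topics: dict[str, list[str]] = {}
    current = None
    for line in spec.splitlines():
        if line.startswith("## "):
            current = line[3:].strip()
            topics[current] = []
        elif current is not None:
            # Text before the first '## ' heading (e.g. a title) is ignored.
            topics[current].append(line)
    return {name: "\n".join(lines).strip() for name, lines in topics.items()}


def build_plan(spec: str, resources: dict[str, list[str]]) -> list[PlanItem]:
    """Turn each topic into a plan item, attaching the packages from the resource mapping."""
    return [
        PlanItem(topic=name, body=body, packages=resources.get(name, []))
        for name, body in split_topics(spec).items()
    ]
```

Each `PlanItem` can then be handed to its own agent run, so no single run has to hold the entire spec in context.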

The downside is that writing this spec document takes far too long. You can spend a day or a week on it, and you can work with agents to clarify it and make sure it has no contradictions. But in this approach, the spec is essentially all yours: your own ideas, plus ideas you riffed on with an AI. The AI didn’t write any of the specs; it was all your own writing or VTT (voice to text).

AI-Assisted Specs

With AI-assisted specs, you talk with an agent to generate the specifications your application needs. The agent writes the spec files, splits them into domains and topics of concern, and organizes them as needed, relaying its progress back to you, the human in the loop. This process has its own faults, though.

As in the first approach, there is a one-on-one conversation with agents, but now the agents are producing the specs. This can hide information from you. Sometimes the agent adds things to the specifications that you never asked for or clarified, which creates feature creep. That can be good or bad, but it is how misalignment occurs. One remedy might be to record the entire conversation with the agent, then evaluate the conversation, your prompts, and the outcome to see how far the result drifts from what you asked for. That way you can process the information effectively and still keep the speed of having the agent write the specifications.
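One crude way to start that evaluation is to flag spec lines that share little vocabulary with anything you actually said. The sketch below assumes you’ve saved your prompts as a list of strings and the generated spec as a list of requirement lines; the word-overlap heuristic and the `unrequested_lines` name are hypothetical choices of mine, and a real audit would need something smarter than keyword matching.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased word set, ignoring short words like 'and' or 'the'."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 3}


def unrequested_lines(
    spec_lines: list[str], human_prompts: list[str], threshold: float = 0.5
) -> list[str]:
    """Return spec lines whose words mostly never appeared in the human's prompts.

    A line is flagged when less than `threshold` of its words were ever used
    by the human: a rough signal of possible feature creep, not proof of it.
    """
    asked = set().union(*(words(p) for p in human_prompts))
    flagged = []
    for line in spec_lines:
        line_words = words(line)
        if not line_words:
            continue
        coverage = len(line_words & asked) / len(line_words)
        if coverage < threshold:
            flagged.append(line)
    return flagged
```

For example, if your prompts only mentioned logging in with email and storing notes, a generated line like "Add a billing dashboard with Stripe subscriptions" would be flagged for review.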

AI-Generated Specs

I split AI-generated specs into two categories. In the first, the agent generates all the specs from a plan, and then a human in the loop refines them. In the second, the agent plans and then simply implements: a purely non-interactive system, left to the AI’s own devices and guided only by the prompts and scaffolding engineered into its harness.

Human Refinement

Great. The agents spent all night working on a plan, generating multiple files on how the system should be built. Let’s say the process produced 4,000 lines of plan specifications. Now you, the human, have to review them and decide what you like and what you don’t, having supplied nothing but the initial prompt.

You may have set up OpenClaw on a cron job to generate these plans and then loosely steered it. That comes close to AI-assisted specs, but not quite, because you never look at the specs themselves. And if you’re steering from your phone, you’re definitely not giving your agent the full specifications you would when you’re in the zone.

Once the specs are generated, you begin the long, arduous process of understanding what the agent produced. This can take several hours of asking questions and verifying how the system works, only to find it’s not exactly what you wanted. But hey, you got something. The agent works from what it knows and keeps building on top of it, which can lead to a huge misalignment in understanding. So when does this need to be steered? When does it need to be re-evaluated or reconsidered? What’s optimal here? Is it three runs of planning followed by one round of refinement? How is this approach best optimized?
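The "three runs of planning, then a round of refinement" question is really a scheduling parameter, so it helps to make it an explicit knob you can experiment with. Here’s a minimal sketch, assuming `plan` wraps one agent planning pass and `refine` wraps one human review pass; both are hypothetical callables, not a real harness API.

```python
from typing import Callable


def plan_then_refine(
    plan: Callable[[str], str],    # one agent planning pass (hypothetical)
    refine: Callable[[str], str],  # one human refinement pass (hypothetical)
    spec: str,
    planning_runs: int = 3,
    rounds: int = 2,
) -> tuple[str, list[str]]:
    """Alternate `planning_runs` agent passes with one human refinement pass.

    Returns the final spec plus a log of which step ran, so the cadence
    can be compared across experiments.
    """
    log: list[str] = []
    for r in range(rounds):
        for i in range(planning_runs):
            spec = plan(spec)
            log.append(f"round {r + 1}: plan {i + 1}")
        spec = refine(spec)
        log.append(f"round {r + 1}: human refinement")
    return spec, log
```

With stub passes, `plan_then_refine(lambda s: s + "+p", lambda s: s + "|h", "")` returns `"+p+p+p|h+p+p+p|h"` and an 8-entry log. Varying `planning_runs` is one way to measure how much drift piles up before a human steps in.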

YOLO AI Autonomy (Vibe Coding)

Okay, so you just got OpenClaw and decided to YOLO it. Or you just got Claude Code and thought, I’m going to run it in a loop all night and see what it does. Cool. Do it. Experiment. Figure it out. I wish I had explored this option more when I first started with agentic coding. But I stopped after the first try and didn’t find it very interesting, because I knew how misaligned it would be. I still had that human urge to steer, which I think has been detrimental in my experience with AI.

So you just give it a prompt and see what it builds. It can work on the thing for hours. You can give it some guardrails, some scaffolding, some prompting to make it more efficient, and it builds whatever the heck it thinks it needs to build. It writes its own plans. It looks for optimizations because it sees problems. It creates its own ecosystem to run the system, because it has no users. Then you tell it to go out into the world and make it a thing. Why not? Why can’t you just do this?

This would be a great thought experiment. Just let it roam free and give it the ability to do whatever it wants. Let it LIVE, RIP IT. Hell, you’d have to give it a credit card so it can buy things and set up those servers, but hey, it might come back with a profit, or just a huge bill.
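If you do hand it a credit card, the most basic guardrail is a loop that stops on an iteration cap or a spending cap, whichever comes first. Here’s a minimal sketch, assuming each agent step can report what it cost; `run_with_budget` and the `step` callable are hypothetical, and this is a soft cap: the step that crosses the limit has already been paid for.

```python
from typing import Callable


def run_with_budget(
    step: Callable[[int], float],  # runs one iteration, returns its cost (hypothetical)
    max_iterations: int,
    max_spend: float,
) -> tuple[int, float]:
    """Run an autonomous loop until it hits the iteration cap or the spending cap.

    Soft cap: spending is checked before each step, so the final step
    may push the total past `max_spend`.
    Returns (iterations completed, total spent).
    """
    spent = 0.0
    done = 0
    while done < max_iterations and spent < max_spend:
        spent += step(done)
        done += 1
    return done, spent
```

With steps costing 10.0 each and a cap of 35.0, `run_with_budget(lambda i: 10.0, 100, 35.0)` stops after 4 iterations at 40.0 spent, which shows why a hard limit on the card itself is still worth having.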